Ultrasonic Reveals Syntact – Contact-Free Tactile Music Controller

Ultrasonic has unveiled Syntact, a new musical interface paradigm in the field of human-computer interaction design. The revolutionary technology behind Syntact provides contact-free tactile feedback to the musician: airborne ultrasound creates a force field in mid-air that can be felt as vibrations on the skin. The interface lets a musician actually feel the sound, including its temporal and harmonic texture. While an optical sensor system interprets their hand gestures, the musician can physically engage with the medium of sound by virtually molding and shaping it, i.e. changing its acoustic character, directly with their hands. Think of this as a Roland D-Beam controller, except that you can tactilely “feel” the sound. Unreal!

Syntact is operated via optical hand-gesture analysis. An integrated USB camera analyzes the hand position in the region around the instrument's focal point. Sophisticated image descriptors are then extracted in real time and converted to MIDI data, which can be used to drive any desired sound synthesis or processing parameters in any audio production software or hardware environment.
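The article does not say which descriptors Syntact extracts or how they are mapped to MIDI. As a minimal sketch, assuming a descriptor arrives as a plain number with a known range (for example, a hypothetical "hand height" value between 0.0 and 1.0), converting it to a standard 3-byte MIDI Control Change message could look like this. The function name, the descriptor range, and the controller number are illustrative assumptions, not Syntact's actual mapping.

```python
def descriptor_to_cc(value, lo, hi, controller, channel=0):
    """Map a raw image-descriptor value onto a MIDI Control Change message.

    Hypothetical helper: lo/hi give the expected descriptor range, which
    is scaled onto the 7-bit MIDI data range 0..127.
    """
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be 0-15")
    if not 0 <= controller <= 127:
        raise ValueError("controller number must be 0-127")

    span = hi - lo
    norm = (value - lo) / span if span else 0.0
    norm = min(max(norm, 0.0), 1.0)  # clamp readings outside the expected range
    data = round(norm * 127)         # 7-bit MIDI data byte

    status = 0xB0 | channel          # 0xB0 = Control Change on channel 0
    return bytes([status, controller, data])


# Hypothetical "hand height" of 0.5 sent on controller 74, channel 0
msg = descriptor_to_cc(0.5, 0.0, 1.0, controller=74)
```

Clamping keeps out-of-range readings (a hand drifting out of the sensing region, say) from producing invalid MIDI data bytes, which must stay within 0 to 127.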

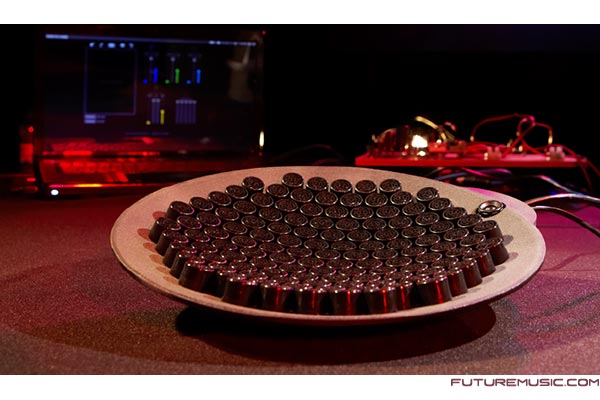

The feedback section of the instrument takes the analog audio output of any software or hardware synthesizer, instrument, sequencer, or other audio generator. From this audio signal it computes an ultrasound signal mixture that reflects the harmonic and temporal characteristics of the sound. The ultrasound signal mixture is projected through an annular array of 121 ultrasonic transducers, which focuses all the acoustic energy in one spot (the focal point).
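Ultrasonic does not disclose how the ultrasound mixture is computed. One common approach in mid-air haptics is to amplitude-modulate an ultrasonic carrier (often around 40 kHz) with the audio signal, since the skin responds to the low-frequency modulation rather than to the carrier itself. The sketch below assumes that approach, a 40 kHz carrier, and audio samples normalized to [-1, 1]; the function name and parameters are hypothetical, and it produces a single drive signal without modelling how that signal is distributed across the 121 transducers.

```python
import math


def am_ultrasound(audio, fs, carrier_hz=40_000.0, depth=1.0):
    """Amplitude-modulate an ultrasonic sine carrier with an audio signal.

    Assumptions (not from Syntact): audio samples lie in [-1, 1], fs is
    the drive signal's sample rate in Hz, and depth in [0, 1] sets how
    strongly the audio modulates the carrier.
    """
    if fs <= 2 * carrier_hz:
        raise ValueError("fs must exceed twice the carrier frequency")
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")

    out = []
    for n, x in enumerate(audio):
        x = min(max(x, -1.0), 1.0)  # clip stray samples to the valid range
        # Non-negative envelope in [(1 - depth) / (1 + depth), 1]
        envelope = (1.0 + depth * x) / (1.0 + depth)
        carrier = math.sin(2.0 * math.pi * carrier_hz * n / fs)
        out.append(envelope * carrier)
    return out
```

In this sketch the envelope tracks the audio waveform directly, so note onsets and low-frequency components of the sound become changes in ultrasonic pressure at the focal point.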

Syntact comes with the Band in a Hand software, which adds visual means of controlling the sound. The musician can use its control panel to select, activate, and visualize different image descriptors; adjust descriptor scaling; and visualize audio (available only for the integrated mapping solution).

Syntact provides an integrated mapping solution that makes it easy and playful to generate meaningful and diverse musical structures. Sound generation is based on MIDI files provided by the user: multi-track files that can either be composed by the user in advance or taken from any existing MIDI material. With different hand gestures, the musician can then trigger different instruments or instrument groups, which generate output according to the pitch and timing information contained in the MIDI files “played” silently in the background. The MIDI files therefore only define the possibility of a note occurring at a certain time; whether it actually sounds is further conditioned by a combination of image descriptors.

While the possible onset times are quantized to a selected grid of smallest time units (e.g. sixteenth notes), the pitch can also be reorganized in real time, through different gestures and based on an automatic analysis of the harmonic progressions in the selected MIDI composition. The result is a musical structure in which the pitch is always “right” and all musical events are always “in time”. The hand gestures define the dynamic variations, the temporal density of events, and some basic harmonic alterations of the pre-selected or pre-composed piece.
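The article names two constraints without giving the algorithm: onsets snap to a grid such as sixteenth notes, and pitches are reorganized to fit the harmony. A minimal sketch of both follows, assuming time is measured in beats (quarter note = 1.0) and that the harmonic analysis has already produced the current chord as a set of pitch classes (0 = C through 11 = B). Both function names are hypothetical, and the chord input merely stands in for Syntact's automatic harmonic analysis, which is not described.

```python
def quantize_onset(t_beats, grid=0.25):
    """Snap an onset time in beats to the nearest multiple of grid.

    grid=0.25 corresponds to sixteenth notes when a quarter note is 1.0 beat.
    Exact halfway points follow Python's round(), which rounds to even.
    """
    if grid <= 0:
        raise ValueError("grid must be positive")
    return round(t_beats / grid) * grid


def snap_pitch(midi_note, chord_pitch_classes):
    """Move a MIDI note to the nearest note whose pitch class is in the chord.

    Ties (equal distance above and below) resolve to the lower note.
    """
    pcs = {pc % 12 for pc in chord_pitch_classes}
    if not pcs:
        raise ValueError("chord_pitch_classes must not be empty")
    # Every valid MIDI note (0..127) belonging to the chord
    candidates = [n for n in range(128) if n % 12 in pcs]
    return min(candidates, key=lambda n: (abs(n - midi_note), n))


# A slightly late C#4 over a C major triad lands on beat 1.0 as C4
onset = quantize_onset(1.1)
pitch = snap_pitch(61, {0, 4, 7})
```

In a fuller version, the chord set would change as the background MIDI file advances, and gesture-derived descriptors would decide whether each quantized, pitch-corrected note actually sounds.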